Latent factors are hidden building blocks that shape user preferences and item characteristics. They are not directly observable but are inferred from the data through a process called dimensionality reduction.

These underlying characteristics, or hidden dimensions, explain the patterns in the interactions between users and items. They influence how users interact with items (ratings, likes, preferences, views, etc.) but are not obvious from the user-item matrix itself.

For instance, let’s say “User A” consistently rates Sci-Fi movies “3 and above”. This suggests that one of their latent factors, or hidden preferences, may be strongly aligned with Sci-Fi content.

Matrix factorization techniques like Singular Value Decomposition (SVD) can uncover patterns in user preferences.

For example, SVD might identify that User A has a strong preference for Sci-Fi, represented by higher weights in Sci-Fi-related factors. This helps the system predict that User A will likely enjoy other Sci-Fi movies. This is the core idea behind collaborative filtering, where the system learns user preferences to make better recommendations.

Let’s begin with a question…

Why can’t we use the user-item matrix as it is, and what is the idea behind decomposition?

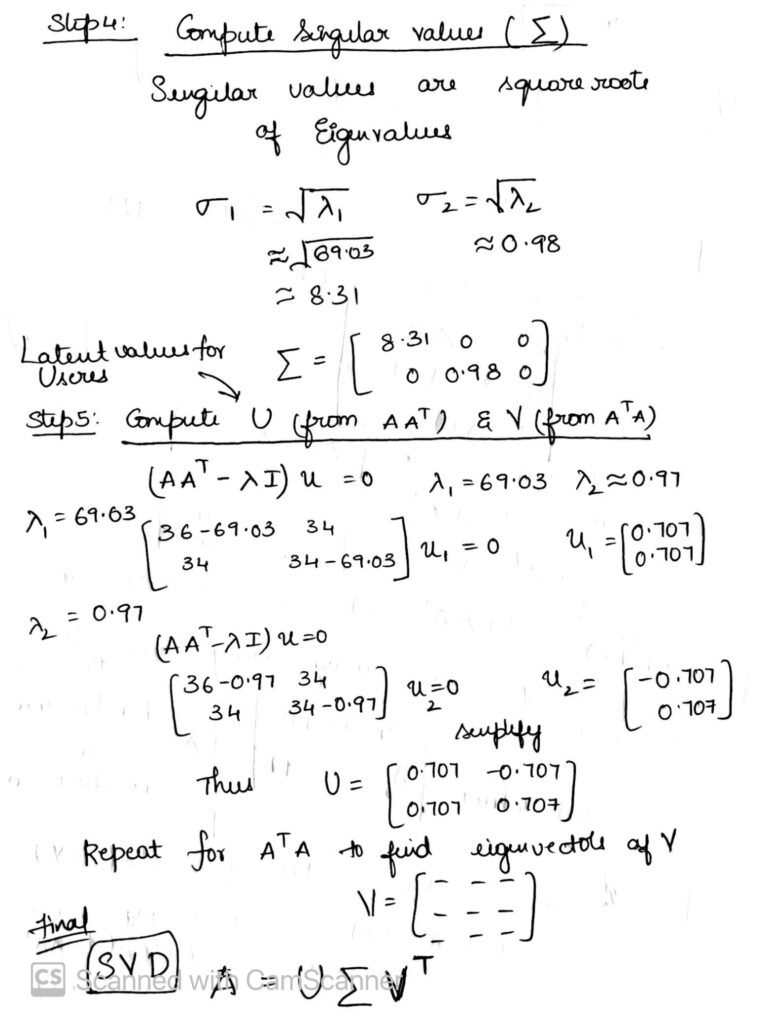

The user-item matrix (let’s say A) might be very large and sparse, and it’s hard to find patterns just by looking at raw numbers. SVD helps by decomposing the user-item matrix into three simpler matrices that, when multiplied together, reconstruct the original matrix A (or approximate it, when only the strongest factors are kept).

$$A = U \Sigma V^T$$

- U – User preferences – represents the users and their latent factors (preferences)

- Σ (Sigma) – Overall importance or strength of these characteristics – is a diagonal matrix containing values that represent the “importance” of each factor

- $V^T$ – Movie characteristics – represents the movies and their latent factors (characteristics).
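To make the three matrices concrete, here is a minimal sketch using NumPy's `np.linalg.svd`. The ratings in `A` are made-up values for two users and three movies (an assumption for illustration, not the data from the figures), and the variable names are my own.

```python
import numpy as np

# Hypothetical ratings: 2 users (rows) x 3 movies (columns).
A = np.array([[5.0, 4.0, 1.0],
              [1.0, 2.0, 5.0]])

# full_matrices=False returns the compact ("economy") SVD.
# NumPy returns the singular values as a 1-D array and V already transposed.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

Sigma = np.diag(s)          # place the singular values on a diagonal
A_rebuilt = U @ Sigma @ Vt  # multiply the three factors back together

print(U.shape, Sigma.shape, Vt.shape)  # (2, 2) (2, 2) (2, 3)
print(np.allclose(A, A_rebuilt))       # True
```

With every factor kept, the product gives back A up to floating-point error. The approximation only appears once some factors are dropped.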

More on Σ:

Matrix Σ (Importance of Factors)

- Σ shows how important each hidden factor is.

- It’s a diagonal matrix, so the largest numbers on the diagonal show which factors matter most in explaining user preferences and movie types.

- For example, if the first factor (like Sci-Fi) has the largest value, it’s the most influential in shaping the recommendations.
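One way to see this "importance" in numbers is to look at each factor's share of the total squared singular values, and then keep only the strongest `k` factors. The sketch below assumes the same made-up ratings matrix as before; `importance`, `k` and `A_k` are illustrative names, and using squared singular values as the importance measure is one common convention rather than the only one.

```python
import numpy as np

# Hypothetical ratings: 2 users x 3 movies.
A = np.array([[5.0, 4.0, 1.0],
              [1.0, 2.0, 5.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Share of the total "energy" (sum of squared singular values) per factor.
importance = s**2 / np.sum(s**2)

# Keep only the k strongest factors to get a low-rank approximation of A.
k = 1
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

print(np.round(importance, 3))  # the first entry is the largest
print(np.round(A_k, 2))         # rank-1 approximation of A
```

NumPy returns the singular values sorted from largest to smallest, so the first factor is always the most influential one.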

How to calculate U, Σ and $V^T$ – Singular Value Decomposition (SVD)

Let’s get into the details using an example.

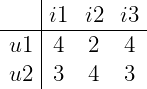

In the above example, u1 and u2 are two users, and i1, i2 and i3 are the movies rated by u1 and u2.

$A$ represents the user-item matrix.

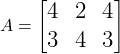

- Compute $AA^T$ and $A^TA$

- Eigenvalues and eigenvectors¹ of $AA^T$ (to compute U)

- Eigenvalues and eigenvectors of $A^TA$ (to compute V)

- Compute the singular values (the square roots of the nonzero eigenvalues)

- Compute U (from $AA^T$) and V (from $A^TA$)
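The steps above can be sketched in NumPy as follows. This is an illustration under a few assumptions: the ratings are made-up values, the singular values are distinct, and `np.linalg.eigh` is used because both products are symmetric. Eigenvectors are only defined up to sign, so the sketch adds a small sign-alignment step that the list does not mention. Without it, the three factors might not multiply back to A.

```python
import numpy as np

# Hypothetical ratings: 2 users x 3 movies.
A = np.array([[5.0, 4.0, 1.0],
              [1.0, 2.0, 5.0]])

# Step 1: the two symmetric products.
AAt = A @ A.T   # 2 x 2 (users x users)
AtA = A.T @ A   # 3 x 3 (movies x movies)

# Step 2: eigen-decomposition of AAt gives U.
# eigh returns eigenvalues in ascending order, so reorder largest first.
vals_u, vecs_u = np.linalg.eigh(AAt)
order_u = np.argsort(vals_u)[::-1]
vals_u, U = vals_u[order_u], vecs_u[:, order_u]

# Step 3: eigen-decomposition of AtA gives V (keep as many columns as U has).
vals_v, vecs_v = np.linalg.eigh(AtA)
order_v = np.argsort(vals_v)[::-1][:len(vals_u)]
V = vecs_v[:, order_v]

# Step 4: singular values are the square roots of the nonzero eigenvalues.
s = np.sqrt(vals_u)

# Sign alignment: flip a column of V whenever A @ v_i points against u_i,
# so that A @ v_i = s_i * u_i holds for every kept factor.
for i in range(len(s)):
    if np.dot(A @ V[:, i], U[:, i]) < 0:
        V[:, i] *= -1

# Step 5: check that the factors rebuild A.
A_rebuilt = U @ np.diag(s) @ V.T
print(np.allclose(A, A_rebuilt))
```

In practice a library routine such as `np.linalg.svd` is usually preferable, since forming $A^TA$ explicitly can lose numerical precision. The manual route is shown here only because it mirrors the steps in the list.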

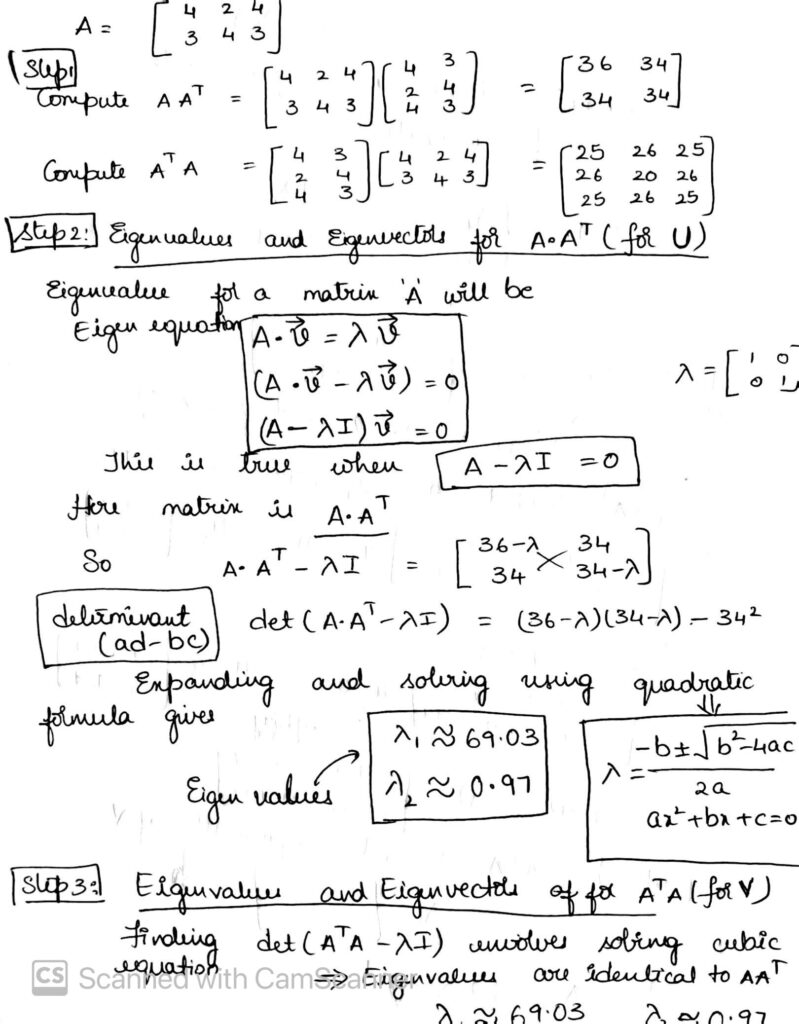

For a square matrix A and a vector v, if v is an eigenvector of A, then:

$$Av = \lambda v$$

A is the matrix.

v is the eigenvector.

λ (lambda) is the eigenvalue.
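This relation is easy to check numerically. The sketch below assumes a made-up ratings matrix and uses its square product with its own transpose as the matrix (named `M` here to avoid confusion with the non-square user-item matrix). It then verifies that multiplying by `M` only scales each eigenvector.

```python
import numpy as np

# Hypothetical ratings: 2 users x 3 movies.
ratings = np.array([[5.0, 4.0, 1.0],
                    [1.0, 2.0, 5.0]])

# A square, symmetric matrix built from the ratings.
M = ratings @ ratings.T

# eigh is meant for symmetric matrices; eigenvectors are the columns.
eigenvalues, eigenvectors = np.linalg.eigh(M)

for lam, v in zip(eigenvalues, eigenvectors.T):
    # M @ v should equal lam * v: same direction, scaled by the eigenvalue.
    print(np.allclose(M @ v, lam * v))
```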

Interpreting U and V

- User Matrix (U): Each row of U represents a user in the latent factor space². The values in a row indicate how strongly the user aligns with each underlying dimension discovered during the decomposition. The main takeaways are:

  - A high value in a specific column of U might suggest that the user strongly prefers a particular genre or feature (a latent characteristic).

  - Users with similar rows in U are likely to have similar preferences.

- Item Matrix (V): Each row of V corresponds to an item and shows how it scores across the same latent dimensions.

  - Items with similar rows in V tend to appeal to the same type of users.

  - High values in certain columns for a given row of the item matrix may highlight dominant features of the item, like popularity or core appeal.

- Collaborative Insight: Together, U and V (weighted by Σ) provide a framework for estimating user-item interactions.
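As a rough sketch of how these interpretations turn into numbers, the code below estimates a rating as the dot product of a user's row and an item's row (with Σ folded into the user side) and compares items by the cosine similarity of their rows in V. The ratings, the helper names `predict` and `cosine`, and the choice to scale the user rows by Σ are all assumptions for illustration. Real systems typically also handle missing ratings, which this example ignores.

```python
import numpy as np

# Hypothetical ratings: 2 users x 3 movies.
A = np.array([[5.0, 4.0, 1.0],
              [1.0, 2.0, 5.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)
V = Vt.T  # rows of V are items

k = 2                            # number of latent factors to keep
user_factors = U[:, :k] * s[:k]  # user rows, scaled by factor strength
item_factors = V[:, :k]          # item rows

def predict(user, item):
    """Estimated interaction: dot product of user and item factor rows."""
    return float(user_factors[user] @ item_factors[item])

def cosine(a, b):
    """Cosine similarity between two factor rows."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# With k = 2 every factor is kept, so this is close to the original 5.0.
print(round(predict(0, 0), 2))

# Movies 0 and 1 were rated alike, so their rows in V are more similar
# than the rows of movies 0 and 2.
print(cosine(item_factors[0], item_factors[1]))
print(cosine(item_factors[0], item_factors[2]))
```

Lowering `k` trades exact reconstruction for a smoother estimate, which is what lets the model generalize beyond the ratings it has already seen.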

Wrapping Up!

By interpreting U and V, we bridge the gap between raw data (like the user-item matrix) and actionable insights (personalization or recommendations). Whether you are building a recommendation system or analyzing user behavior, understanding these latent factors helps uncover hidden patterns and deliver personalized experiences.

I hope this post helps you understand the role of latent factors and how they can be used in recommendation systems. If you have any questions or want to share your thoughts, feel free to leave a comment!

1. Eigenvectors are special vectors that, when transformed by the matrix, don’t change their direction. Instead, they only get scaled by a number. This scaling number is the eigenvalue.

2. Latent factor space – a lower-dimensional space onto which high-dimensional data (like the user-item matrix) is projected, capturing its most essential and meaningful characteristics. In the context of user-item interactions, the latent space is defined by latent factors, which are abstract features that influence the observed data but are not directly observable.