In the AI space, vector databases are emerging as essential tools for handling unstructured data such as images, audio, and text. They use vector representations of data to enable efficient searching, retrieval, and analysis. This post explains how vector databases work, how vector embeddings are generated, which models are used, and the role transfer learning plays in this context.

Vector Database

Unlike traditional databases that store data in rows and columns, vector databases store data as dense numerical vectors, also called embeddings, that capture semantic meaning and complex relationships. They are designed to store, index, and query high-dimensional vectors, enabling similarity search and semantic understanding. Vector databases play a key role in areas such as recommendation systems, Natural Language Processing (NLP), and various other machine learning tasks.

There are many more options than the list below, but to give an idea, here are some popular choices for vector databases:

- FAISS (Facebook AI Similarity Search) – a library designed for fast similarity search and clustering of dense vectors. It provides tools to build, index, and query vector spaces efficiently, but it is a library rather than a full-fledged database.

- Pinecone – Fully Managed Vector Database

- Weaviate[1] – Open-Source Vector Database

Vector Embeddings

A vector embedding is a mathematical representation of data (such as a word, sentence, image, audio clip, or large document) as a fixed-size vector in a high-dimensional space[2] that captures its semantic meaning. The underlying idea is that semantically similar inputs, such as two pieces of text with related meaning, have similar vector representations.

Every word can be represented as a high-dimensional vector whose values depend on the model used to generate the embedding. The resulting representation is a dense vector, as in this toy 4-dimensional example:

[0.12, -0.45, 0.89, -0.23] - king

[0.11, -0.44, 0.88, -0.22] - queen

[0.10, -0.50, 0.85, -0.20] - man

[0.09, -0.47, 0.84, -0.21] - woman

[-0.5, 0.3, -0.8, 0.1] - apple

When these word embeddings are plotted, the related words (king, queen, man, woman) form a group close to each other, while the unrelated word “apple” stands alone in a different region.
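To make "close to each other" concrete, the sketch below computes cosine similarity between the toy vectors above. It is a minimal pure-Python illustration; `EMBEDDINGS`, `cosine_similarity`, and `most_similar` are hypothetical names, not part of any library, and the vectors are the illustrative values from this post rather than output from a real model.

```python
import math

# Toy 4-dimensional word vectors copied from the example above (illustrative only).
EMBEDDINGS = {
    "king": [0.12, -0.45, 0.89, -0.23],
    "queen": [0.11, -0.44, 0.88, -0.22],
    "man": [0.10, -0.50, 0.85, -0.20],
    "woman": [0.09, -0.47, 0.84, -0.21],
    "apple": [-0.5, 0.3, -0.8, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1 = same direction, -1 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, k=2):
    """Return the k other words whose vectors are closest (by cosine) to `word`."""
    query = EMBEDDINGS[word]
    scores = [
        (other, cosine_similarity(query, vec))
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    # Highest similarity first
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:k]


if __name__ == "__main__":
    for word in EMBEDDINGS:
        print(word, [(w, round(s, 3)) for w, s in most_similar(word)])
```

With these values, king, queen, man, and woman all score above 0.99 against each other, while apple scores negative against every one of them, which is the grouping a plot would show.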

Generating Vector Embeddings

The models used to generate vector embeddings are typically neural networks designed for specific data types. They are trained to capture the essential features or semantics of their input.

For text, common models include:

- Word2Vec (Skip-Gram[3], CBOW[4]): Skip-Gram predicts the surrounding context words from a target word, while CBOW predicts the target word from its context.

- GloVe: Learns embeddings by analyzing word co-occurrence statistics in a text corpus.

- Transformer-Based Models (e.g., BERT, GPT, Sentence-BERT): Encode text using attention mechanisms to capture rich contextual and semantic information[5].
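The difference between the two Word2Vec variants is clearest in the training examples each one builds from a sentence. The helpers below (hypothetical names, pure Python) only generate those examples with a fixed context window; Word2Vec then trains a shallow neural network on them, typically with additional techniques such as negative sampling that this sketch leaves out.

```python
def skipgram_pairs(tokens, window=2):
    """(target, context) pairs: Skip-Gram learns to predict each context word from the target."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # the target is not its own context
                pairs.append((target, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """(context_words, target) examples: CBOW learns to predict the target from its context."""
    examples = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


if __name__ == "__main__":
    sentence = "the king rules the land".split()
    print(skipgram_pairs(sentence, window=1))
    print(cbow_examples(sentence, window=1))
```

Both variants see the same co-occurrence information; they just frame the prediction task in opposite directions.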

Workflow to Generate Embeddings

- Data Preprocessing:

  - For text: tokenization, i.e., splitting sentences into words or subwords and converting the tokens into numerical representations. Various NLP libraries handle this, e.g., the TensorFlow tokenizers[6].

  - For audio: converting the audio signal into features such as spectrograms.

  - For images: normalization, which scales pixel values to a specific range (e.g., between 0 and 1 or between -1 and 1).

- Data Encoding:

  - The input is fed to a pre-trained or fine-tuned model that generates embeddings.

  - Intermediate layers capture progressively more complex representations of the input.

  - Most often, the output of the penultimate (second-to-last) layer is extracted as the embedding vector. Want to know why? See note [7].

- Fixed-length vector:

  - The final vector has a fixed length that is determined by the model architecture, not by the input. For instance, a Sentence-BERT model built on BERT-base produces 768-dimensional vectors, while smaller Sentence-BERT variants produce 384-dimensional vectors.
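The encoding and fixed-length steps can be sketched with a toy pipeline. This is a minimal sketch, not the author's code: the vocabulary, the 3-dimensional `TOKEN_VECTORS` table, and the helper names are hypothetical stand-ins for a real tokenizer and a real model's per-token outputs. It uses mean pooling (averaging token vectors), which is one common way sentence encoders such as Sentence-BERT turn a variable number of token vectors into a single fixed-length vector.

```python
import math

# Hypothetical 3-dimensional token vectors standing in for a real model's per-token outputs.
TOKEN_VECTORS = {
    "vector": [0.9, 0.1, 0.0],
    "databases": [0.8, 0.2, 0.1],
    "store": [0.1, 0.7, 0.2],
    "embeddings": [0.7, 0.3, 0.2],
    "[UNK]": [0.0, 0.0, 0.0],  # placeholder for out-of-vocabulary words
}


def tokenize(text):
    """Very simplified tokenizer: lowercase, split on whitespace, map unknown words to [UNK]."""
    return [w if w in TOKEN_VECTORS else "[UNK]" for w in text.lower().split()]


def mean_pool(vectors):
    """Average token vectors component-wise; the output length does not depend on the token count."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def l2_normalize(vec):
    """Scale to unit length so that cosine similarity reduces to a dot product."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec


def embed(text):
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("cannot embed empty input")
    token_vecs = [TOKEN_VECTORS[t] for t in tokens]
    return l2_normalize(mean_pool(token_vecs))


if __name__ == "__main__":
    print(embed("Vector databases"))
    print(embed("Vector databases store embeddings"))
```

Whether the input has two words or twenty, `embed` returns a vector with the same number of components; in a real model that number would be something like 384 or 768 rather than 3.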

Storing Embeddings in Vector Databases

Vector embeddings are stored as high-dimensional vectors (nothing but arrays). The core purpose of a vector database is to provide an efficient mechanism for finding embeddings that are most similar to a given query embedding.

Indexing

When the dataset consists of a large number of high-dimensional vectors (e.g., embeddings from a machine learning model), finding the nearest neighbors of a query vector (based on similarity or distance) becomes computationally expensive. A brute-force search compares the query with every stored vector, so its cost scales linearly with the size of the database, which can be extremely slow for large datasets. To alleviate this, vector databases support various kinds of indexes that pre-organize the embeddings.
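The linear-scan cost described above is easy to see in a brute-force search. This minimal sketch (hypothetical helper names, pure Python, Euclidean distance assumed) computes one distance per stored vector, which is conceptually what a flat index does; real libraries vectorize this heavily but still touch every vector.

```python
import heapq
import math


def euclidean(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def brute_force_knn(query, vectors, k=3):
    """Exact k-nearest neighbors: one distance computation per stored vector, O(N * d).

    Returns a list of (distance, index) tuples, closest first.
    """
    # heapq.nsmallest keeps only the k best candidates while scanning all N vectors
    return heapq.nsmallest(
        k,
        ((euclidean(query, vec), idx) for idx, vec in enumerate(vectors)),
    )


if __name__ == "__main__":
    stored = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [5.0, 5.0]]
    print(brute_force_knn([0.1, 0.0], stored, k=2))
```

Doubling the number of stored vectors doubles the work per query, which is exactly the cost indexes try to avoid.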

An index isn’t just about speed; it also determines how vectors are searched, so search behavior varies with the type of index used. A few common index types:

- Graph-based ANN (Approximate Nearest Neighbor) indexes – structures such as HNSW (Hierarchical Navigable Small World) build a graph in which each node is connected to nearby vectors, so a search can hop toward similar vectors instead of scanning every one.

- IndexFlatL2 (FAISS) – brute-force exact search, where the query vector is compared with every stored vector using Euclidean (L2) distance.

- IndexIVFFlat (FAISS) – clustered (inverted-file) indexing, where vectors are grouped into clusters and a query only scans the vectors in the clusters closest to it.


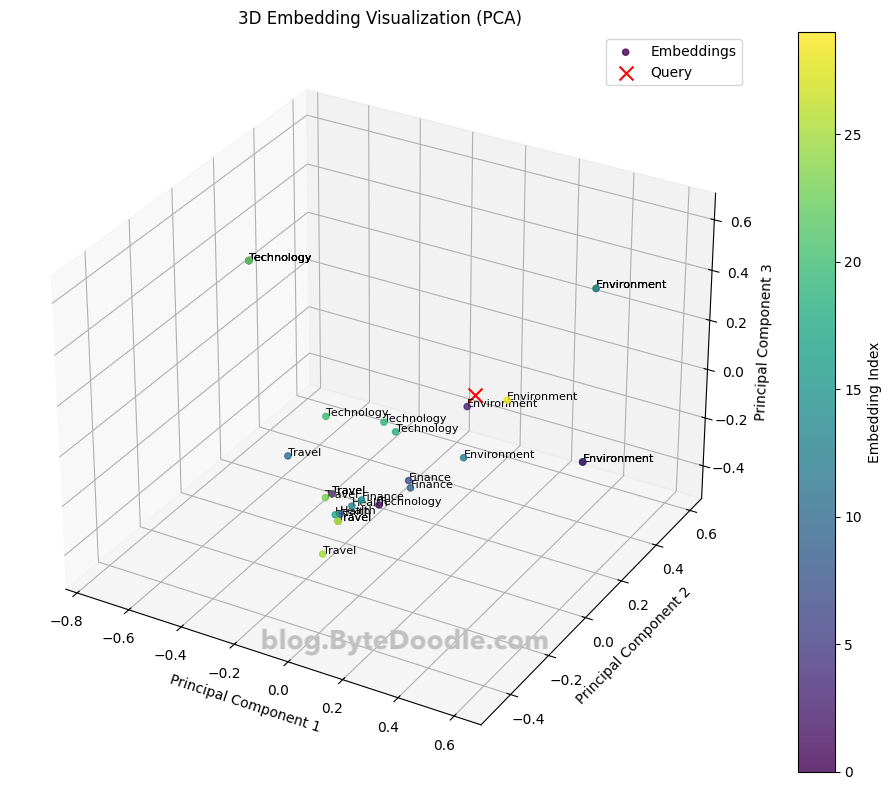
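The clustering idea behind an IVF index can be sketched in a few dozen lines. This toy version is not FAISS's implementation: `ToyIVFIndex`, `kmeans`, and `squared_l2` are hypothetical names, and it runs plain k-means in pure Python. The `nprobe` parameter mirrors the FAISS setting of the same name: it controls how many of the nearest clusters are scanned at query time.

```python
import random


def squared_l2(a, b):
    """Squared Euclidean distance (ranking by it is the same as ranking by L2)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(vectors, n_clusters, iters=10, seed=0):
    """Plain Lloyd's k-means; returns the list of centroids."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, n_clusters)]
    for _ in range(iters):
        groups = [[] for _ in range(n_clusters)]
        for v in vectors:
            nearest = min(range(n_clusters), key=lambda c: squared_l2(v, centroids[c]))
            groups[nearest].append(v)
        for c, members in enumerate(groups):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


class ToyIVFIndex:
    """Inverted-file index: each centroid owns a list of the vector ids assigned to it."""

    def __init__(self, vectors, n_clusters=4, seed=0):
        self.vectors = vectors
        self.centroids = kmeans(vectors, n_clusters, seed=seed)
        self.lists = {c: [] for c in range(n_clusters)}
        for idx, v in enumerate(vectors):
            self.lists[self._nearest_centroids(v, 1)[0]].append(idx)

    def _nearest_centroids(self, v, n):
        order = sorted(range(len(self.centroids)), key=lambda c: squared_l2(v, self.centroids[c]))
        return order[:n]

    def search(self, query, k=3, nprobe=1):
        """Scan only the vectors in the nprobe clusters closest to the query (approximate)."""
        candidates = [
            idx for c in self._nearest_centroids(query, nprobe) for idx in self.lists[c]
        ]
        candidates.sort(key=lambda idx: squared_l2(query, self.vectors[idx]))
        return candidates[:k]


if __name__ == "__main__":
    centers = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    offsets = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5), (0.5, 0.5)]
    points = [[cx + dx, cy + dy] for cx, cy in centers for dx, dy in offsets]
    index = ToyIVFIndex(points, n_clusters=4)
    print(index.search([10.1, 10.1], k=3, nprobe=1))
```

With `nprobe` equal to the number of clusters, the search degenerates to an exact scan; smaller values scan fewer vectors but can miss a true neighbor that landed in an unprobed cluster. That is the speed/recall trade-off approximate indexes make.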

Example of Vector-Based Similarity Search

- I used the open-source Weaviate vector database and created a sandbox cluster to store the vector embeddings.

- To generate the embeddings, I used a Sentence-BERT model from the SentenceTransformers package that produces 384-dimensional vectors.

Note on Transfer learning

Instead of training a new embedding model from scratch on our specific dataset (which would require a lot of data and computational resources), we “transfer” the knowledge learned by a pre-trained model to our task (similarity search). This is transfer learning.

Pre-trained models: neural networks that have been trained on massive datasets (e.g., billions of text documents, millions of images) to learn general-purpose representations of language, images, or other data modalities. They capture a broad understanding of semantics, syntax, and other important features. When we use them in the workflow, we simply load the model's trained weights.

If needed, we can fine-tune the model on our own data, but in many cases a pre-trained model performs well as-is.

```python
# Query string
query = "What are the effects of global warming?"

# Generated
# {'content': 'The effects of deforestation on biodiversity.', 'title': 'Environment Article 4', 'category': 'Environment'}
# 0.6147304177284241
# {'content': 'The importance of ocean conservation.', 'title': 'Environment Article 29', 'category': 'Environment'}
# 0.6375518441200256
```

Embeddings for the query vector (384 values, truncated for display):

```
[-0.04799653962254524, 0.06843655556440353, 0.1375199556350708, 0.0811426192522049, 0.10077031701803207, 0.03025835193693638, -0.03746117278933525, 6.174238660605624e-05, -0.028748080134391785, 0.05203363671898842, 0.0057806456461548805, -0.0650133267045021, 0.007347447797656059, 0.0004147003637626767, -0.02567530982196331, 0.006057262886315584, -0.06908927112817764, -0.007177633699029684, ..., -0.002986110979691148]
```

If you are wondering what these values are or who defines these features, that’s a fair question. They are latent features that the model learned during pre-training; nobody defines them by hand. If you want to know how latent features are derived and the math behind them, please see my post on Latent Features.

GitHub link for the full code – vector_database_weaviate

Query Processing in a Vector Database

A vector database query is processed in these steps:

- Query Embedding: The query (text, image, etc.) is converted into a vector embedding using the same model as the stored vectors.

- Index Lookup (ANN): An approximate nearest neighbor index (HNSW is a common choice) quickly identifies a subset of potential matches, avoiding a full database scan. Because the search is approximate, it trades a small amount of accuracy for speed.

- Distance Calculation & Ranking: The query vector is compared to the candidate vectors using a similarity metric (e.g., Cosine Similarity, Euclidean Distance, Dot Product). Results are ranked by similarity.

- Result Retrieval: The top-k most similar vectors (and their associated data) are returned.
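The choice of metric in the ranking step matters. In this minimal sketch (hypothetical toy vectors and helper names, exhaustive ranking rather than an index), the dot product favors vectors with a large magnitude, while cosine similarity ignores magnitude. Once all vectors are L2-normalized, the three metrics produce the same ranking, because the squared Euclidean distance between unit vectors equals 2 - 2 * cos.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def normalize(v):
    """Scale v to unit length."""
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def top_k(query, docs, k, metric):
    """Rank docs (id -> vector) by the chosen metric and return the best k ids."""
    if metric == "euclidean":
        # Distance: smaller means more similar
        return sorted(docs, key=lambda d: euclidean_distance(query, docs[d]))[:k]
    if metric == "cosine":
        score = cosine_similarity
    elif metric == "dot":
        score = dot
    else:
        raise ValueError(f"unknown metric: {metric}")
    # Similarity: larger means more similar
    return sorted(docs, key=lambda d: score(query, docs[d]), reverse=True)[:k]


if __name__ == "__main__":
    docs = {"a": [1.0, 0.0], "b": [0.7, 0.7], "c": [3.0, 2.0]}
    query = [1.0, 0.2]
    for m in ("cosine", "dot", "euclidean"):
        print(m, top_k(query, docs, 3, m))
```

In practice, the safest choice is generally the metric the embedding model was trained for; many sentence-embedding models are meant to be compared with cosine similarity.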

Final Thoughts

Vector databases store data as high-dimensional vectors, numerical representations that capture semantic meaning. This enables efficient similarity search: retrieving relevant information based on conceptual similarity rather than keyword matching. This retrieval process is also a crucial component of Retrieval Augmented Generation (RAG) systems.

Vector databases are also widely used in semantic search, recommendation systems, image and video retrieval, and anomaly detection (e.g., detecting unusual traffic that deviates from normal patterns).

Hopefully, this has made vector databases a little less daunting. If not, well, at least we tried!

- [1] Weaviate: open-source vector database – https://weaviate.io/developers/wcs

- [2] High-dimensional vector: a dense vector representation.

- [3] Skip-Gram: an embedding technique developed as part of the Word2Vec model; it predicts the surrounding context words given a target word.

- [4] CBOW (Continuous Bag of Words): an embedding technique developed as part of the Word2Vec model; it predicts the target word given its surrounding context words.

- [5] Semantic information: the meaning of words, concepts, and ideas.

- [6] TensorFlow tokenizers guide – https://www.tensorflow.org/text/guide/tokenizers

- [7] Why is the penultimate layer’s output extracted as the embedding vector?

By the penultimate layer, the network has learned high-level, abstract features that capture the semantic meaning of the input. This is crucial for similarity search, where we want to compare items based on their meaning rather than low-level details. The final layer maps these features to task-specific outputs, so the penultimate layer, being one step before this task-specific transformation, provides a more general-purpose representation.

Earlier layers capture low-level features that are not semantically rich enough for many applications. For example, in text, an early layer might just represent individual words or characters, without capturing the relationships between them or the overall meaning of a sentence.

The final layer’s output is often passed through an activation function (like softmax for classification) that makes it less suitable for direct comparison using distance metrics like cosine similarity. It is also very task-specific.